
Dolby noise-reduction system

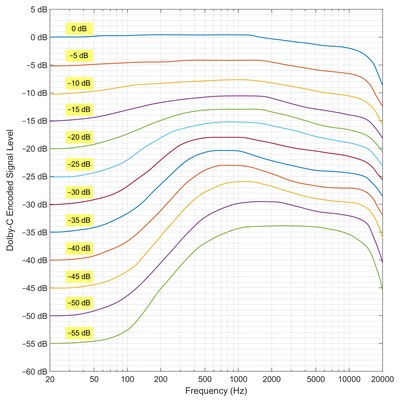

A Dolby noise-reduction system, or Dolby NR, is one of a series of noise reduction systems developed by Dolby Laboratories for use in analog audio tape recording.[1] The first was Dolby A, a professional broadband noise reduction system for recording studios that was first demonstrated in 1965. The best-known is Dolby B (introduced in 1968), a sliding-band system for the consumer market. It helped make high fidelity practical on cassette tapes, whose narrow tape and slow speed made them relatively noisy. Dolby B remains common on high-fidelity stereo tape players and recorders to the present day, although as of 2016 Dolby has ceased licensing the technology for new cassette decks. Of the noise reduction systems, Dolby A and Dolby SR were developed for professional use, while Dolby B, C, and S were designed for the consumer market. Apart from Dolby HX, all the Dolby variants work by companding: compressing the dynamic range of the sound during recording and expanding it during playback.

Process

When a signal is recorded on magnetic tape, there is a low level of background noise that sounds like hissing. One solution is to use low-noise tape, which records more signal relative to its noise. Other solutions are to run the tape at a higher speed or to use a wider tape. Cassette tapes were originally designed to trade off fidelity for the convenience of recording voice. They used a very narrow tape running at a very slow 1⅞ in/s (4.8 cm/s), housed in a simple plastic shell. At the time, 15 in/s (38 cm/s) and 7½ in/s (19 cm/s) were the tape speeds used for high fidelity, and 3¾ in/s (9.5 cm/s) gave lower fidelity. Because of their narrow tracks and slow speed, cassettes suffer from severe tape hiss. Dolby noise reduction is a form of dynamic pre-emphasis applied during recording, plus a complementary dynamic de-emphasis applied during playback; the two work in tandem to improve the signal-to-noise ratio.
The signal-to-noise ratio is a measure of how large the music signal is compared to the low level of tape noise present with no signal. When the music is loud, the low background hiss is not noticeable, but when the music is soft or silent, most or all of what can be heard is the noise. If the music were always recorded loudly, the low-level noise would not be audible. One cannot simply raise the recording level to achieve this: tapes have a maximum level they can record, so already-loud sounds would become distorted. The idea is to raise the recording level only when the original material is not already loud, and then lower it by the same amount on playback so that the signal returns to its original level. When the level is lowered on playback, the noise is lowered by the same amount. This basic concept, raising the level to overwhelm inherent noise, is known as pre-emphasis, and is found in a number of products. Dolby noise reduction systems add a further refinement that takes into account the fact that tape noise is heard mostly at frequencies above 1,000 Hz. Lower-frequency sounds, such as drum beats, are often the loud ones. By applying the companding only to certain frequencies, the total alteration of the original signal can be reduced and confined to the problematic frequencies. The various Dolby products differ mainly in the frequency bands they process and in how much they modify the signal level in each band. Within each band, the amount of pre-emphasis applied depends on the original signal level. For instance, in Dolby B a low-level signal is boosted by 10 dB, while signals at "Dolby Level" (+3 VU) receive no modification at all. Between these two limits, a varying amount of pre-emphasis is applied.
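The level-dependent boost described above can be sketched as a static gain law. This is a minimal sketch under stated assumptions, not Dolby's circuit. It treats the signal as a single level in dB relative to Dolby Level and ignores the frequency-selective (sliding-band) behavior. It also assumes the boost fades linearly from +10 dB at a hypothetical knee of −40 dB to 0 dB at Dolby Level. The helper `hiss_reduction_db` (a hypothetical name) estimates how much the decoder lowers constant tape hiss. It assumes the hiss is well below the program, so the decoder's gain is set by the program level.

```python
# ASSUMPTIONS: static, broadband, level-only model of a Dolby B-style encoder.
# Levels are in dB relative to Dolby Level (0 dB = Dolby Level).
# The +10 dB low-level boost and "no change at Dolby Level" come from the text;
# the -40 dB knee and the straight-line fade between them are hypothetical.

MAX_BOOST_DB = 10.0  # boost applied to low-level signals
KNEE_DB = -40.0      # hypothetical level at and below which full boost applies


def boost_db(level_db: float) -> float:
    """Encoder boost for a signal at level_db."""
    if level_db <= KNEE_DB:
        return MAX_BOOST_DB
    if level_db >= 0.0:
        return 0.0
    # fades linearly from MAX_BOOST_DB at the knee to 0 dB at Dolby Level
    return MAX_BOOST_DB * level_db / KNEE_DB


def encode(level_db: float) -> float:
    """Level written to tape (compression / pre-emphasis)."""
    return level_db + boost_db(level_db)


def decode(tape_db: float) -> float:
    """Exact inverse of encode (expansion / de-emphasis)."""
    if tape_db <= KNEE_DB + MAX_BOOST_DB:
        return tape_db - MAX_BOOST_DB
    if tape_db >= 0.0:
        return tape_db
    # on the sloped segment, encode(x) = x * (1 + MAX_BOOST_DB / KNEE_DB)
    return tape_db / (1.0 + MAX_BOOST_DB / KNEE_DB)


def hiss_reduction_db(program_db: float) -> float:
    """dB by which the decoder lowers constant tape hiss while this level plays."""
    tape_db = encode(program_db)
    return tape_db - decode(tape_db)


for level in (-60.0, -40.0, -20.0, 0.0):
    print(f"{level:6.1f} dB in -> {encode(level):6.1f} dB on tape, "
          f"hiss cut {hiss_reduction_db(level):4.1f} dB")
```

For example, under these assumptions a passage 20 dB below Dolby Level is recorded 5 dB hotter. The hiss underneath it is then lowered by the same 5 dB on playback.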
On playback, the opposite process (de-emphasis) is applied, based on the relative signal component above 1 kHz. As this portion of the signal decreases in amplitude, the higher frequencies are progressively attenuated. This also lowers the constant background noise on the tape when and where it would be most noticeable. The two processes (pre- and de-emphasis) are intended to cancel each other out as far as the recorded program material is concerned. During playback, only de-emphasis is applied, acting on both the off-tape signal and the noise. Once de-emphasis is complete, the apparent noise in the output is reduced. The process should produce no other effect noticeable to the listener. However, playback without noise reduction produces a noticeably brighter sound. Correct calibration of the recording and playback circuitry is critical to faithful reproduction of the original program. The calibration can easily be upset by poor-quality tape, dirty or misaligned recording/playback heads, a bias level or frequency inappropriate for the tape formulation, or incorrect tape speed when recording or duplicating. This can manifest itself as muffled-sounding playback, or as "breathing" of the noise level as the signal level varies. Some high-end consumer equipment includes a Dolby calibration control. For recording, a reference tone at Dolby Level may be recorded so that playback level can be calibrated accurately on another transport. At playback, the same recorded tone should produce an identical output, as indicated by a Dolby logo marking at approximately +3 VU on the VU meter(s). In consumer equipment, Dolby Level is defined as 200 nWb/m, and calibration tapes were available to help set levels correctly.
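To see why calibration matters, the sketch below models a playback-level error in front of the decoder. It uses an idealized, level-only compander law rather than the real frequency-dependent circuit. That law is an assumption: +10 dB boost below a hypothetical −40 dB knee, fading linearly to 0 dB at Dolby Level. Where the encoder's gain is constant, a level error passes through unchanged. In the transition region, where the gain varies with level, the decoder magnifies it, so the error changes with program level.

```python
# ASSUMPTION: idealized static Dolby B-style law (levels in dB re Dolby Level):
# +10 dB below a hypothetical -40 dB knee, 0 dB at and above Dolby Level,
# linear in between. Real Dolby B acts on a sliding high-frequency band.

MAX_BOOST_DB = 10.0
KNEE_DB = -40.0
SLOPE = 1.0 + MAX_BOOST_DB / KNEE_DB  # 0.75: encoder slope in the transition region


def encode(x: float) -> float:
    if x <= KNEE_DB:
        return x + MAX_BOOST_DB
    if x >= 0.0:
        return x
    return x * SLOPE


def decode(y: float) -> float:
    if y <= KNEE_DB + MAX_BOOST_DB:
        return y - MAX_BOOST_DB
    if y >= 0.0:
        return y
    return y / SLOPE


def mistracking_error_db(program_db: float, playback_offset_db: float) -> float:
    """Output level error when playback runs playback_offset_db high (+) or low (-)."""
    return decode(encode(program_db) + playback_offset_db) - program_db


for level in (-60.0, -20.0, 10.0):
    print(level, round(mistracking_error_db(level, -2.0), 2))
```

Under these assumptions, playing back 2 dB low gives a 2 dB error on very quiet material. On material 20 dB below Dolby Level, the error grows to about 2.7 dB. In a real Dolby B deck that varying error falls on the high frequencies, which is consistent with the muffled or breathing symptoms described above.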
For accurate off-tape monitoring during recording on 3-head tape decks, both processes must operate at once. The circuitry provided for this is marketed under the "Double Dolby" label.

Dolby A

Dolby A-type noise reduction was Dolby's first noise reduction system, presented in 1965.[2][3] It was intended for use in professional recording studios, where it became commonplace, gaining widespread acceptance at the same time that multitrack recording became standard. The input signal is split into four frequency bands by filters with 12 dB per octave slopes. Their cutoff frequencies (3 dB down points) are: a low-pass at 80 Hz; a band-pass from 80 Hz to 3 kHz; a high-pass from 3 kHz; and another high-pass from 9 kHz. (Stacking the contributions of the two high-pass bands allows greater noise reduction at the upper frequencies.) The compander circuit has a threshold of −40 dB and a ratio of 2:1, giving 10 dB of compression or expansion. This provides about 10 dB of noise reduction, increasing to as much as 15 dB at 15 kHz. These figures come from articles by Ray Dolby published by the Audio Engineering Society (October 1967)[4][5] and in Audio (June/July 1968).[6][7] As with the Dolby B-type system, correct matching of the compression and expansion processes is important. For magnetic tape, the expansion (decoding) unit is calibrated using a flux level of 185 nWb/m, the level used on industry calibration tapes such as those from Ampex. This level is set to 0 VU on the tape recorder's playback and to Dolby Level on the noise reduction unit. In record (compression or encoding) mode, a characteristic tone (Dolby Tone) generated inside the noise reduction unit is set to 0 VU on the tape recorder and to 185 nWb/m on the tape. The Dolby A-type system also saw some use for noise reduction in optical sound for motion pictures.
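The band split and compander figures above can be combined into a rough sketch. Two parts of it are assumptions. First, "threshold −40 dB, ratio 2:1, 10 dB" is read as a piecewise-linear static law: a full 10 dB boost below −40 dB, then a 2:1 slope until the boost reaches zero at −20 dB. Second, the same law is applied independently in each of the four bands. The band labels are descriptive strings only, and no filtering is modelled. The sketch illustrates the earlier point about frequency-selective companding: a loud bass band does not remove the boost in a quiet treble band.

```python
from typing import Dict

# Figures from the text: threshold -40 dB, ratio 2:1, 10 dB of compression.
# ASSUMPTION: they describe a piecewise-linear static law (full boost below
# the threshold, 2:1 slope above it until the boost has fallen to zero).
THRESHOLD_DB = -40.0
RATIO = 2.0
MAX_BOOST_DB = 10.0
# input level at which the boost reaches 0 dB (-20 dB with the figures above)
UNITY_DB = THRESHOLD_DB + MAX_BOOST_DB * RATIO / (RATIO - 1.0)

# The four Dolby A bands (labels only; no filtering is modelled here).
BANDS = ("LP 80 Hz", "BP 80 Hz-3 kHz", "HP 3 kHz", "HP 9 kHz")


def encode(level_db: float) -> float:
    """Static 2:1 compressor law for one band (dB in -> dB out)."""
    if level_db <= THRESHOLD_DB:
        return level_db + MAX_BOOST_DB
    if level_db >= UNITY_DB:
        return level_db
    # above threshold, output rises 1 dB for every RATIO dB of input
    return THRESHOLD_DB + MAX_BOOST_DB + (level_db - THRESHOLD_DB) / RATIO


def decode(level_db: float) -> float:
    """Complementary 1:2 expander law, the exact inverse of encode."""
    if level_db <= THRESHOLD_DB + MAX_BOOST_DB:
        return level_db - MAX_BOOST_DB
    if level_db >= UNITY_DB:
        return level_db
    return THRESHOLD_DB + (level_db - THRESHOLD_DB - MAX_BOOST_DB) * RATIO


def encode_bands(band_levels_db: Dict[str, float]) -> Dict[str, float]:
    """Apply the same law independently to each band's level."""
    unknown = set(band_levels_db) - set(BANDS)
    if unknown:
        raise ValueError(f"unknown bands: {sorted(unknown)}")
    return {band: encode(level) for band, level in band_levels_db.items()}


print(encode_bands({"LP 80 Hz": -5.0, "HP 9 kHz": -50.0}))
```

Here the loud 80 Hz band passes unchanged while the quiet 9 kHz band is still boosted by 10 dB. A single broadband compander driven by the bass would have applied no boost at all.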
In 2004, Dolby A-type noise reduction was inducted into the TECnology Hall of Fame, an honor given to "products and innovations that have had an enduring impact on the development of audio technology."[8]

Dolby B

Dolby B-type noise reduction was developed after Dolby A and introduced in 1968. It consists of a single sliding-band system providing about 9 dB of noise reduction (A-weighted), primarily for use with cassette tapes. It was much simpler than Dolby A and therefore much less expensive to implement in consumer products. Dolby B recordings are acceptable when played back on equipment without a Dolby B decoder, such as many inexpensive portable and car cassette players. Without the decoder's de-emphasis, the sound is perceived as brighter because high frequencies are emphasized. This can offset the "dull" high-frequency response of inexpensive equipment. However, Dolby B provides less effective noise reduction than Dolby A, generally by more than 3 dB. The Dolby B system is effective from approximately 1 kHz upwards; the noise reduction provided is 3 dB at 600 Hz, 6 dB at 1.2 kHz, 8 dB at 2.4 kHz, and 10 dB at 5 kHz. The width of the noise reduction band is variable, as it responds to both the amplitude and the frequency distribution of the signal. It is thus possible to obtain significant noise reduction down to quite low frequencies without causing audible modulation of the noise by the signal ("breathing").[9] From the mid-1970s, Dolby B became standard on commercially pre-recorded music cassettes. Although some low-end equipment lacked decoding circuitry, such recordings still play back acceptably on it. Most pre-recorded cassettes use this variant. VHS video recorders used Dolby B on their linear stereo audio tracks.
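The four noise-reduction figures quoted for Dolby B can be turned into a small lookup. Interpolating linearly on a logarithmic frequency axis between those points is an assumption made for illustration. The text gives no figures outside 600 Hz to 5 kHz, so the sketch returns `None` there instead of guessing. `NR_POINTS` and `dolby_b_nr_db` are hypothetical names.

```python
import bisect
import math
from typing import Optional

# (frequency in Hz, noise reduction in dB) as quoted for Dolby B
NR_POINTS = [(600.0, 3.0), (1200.0, 6.0), (2400.0, 8.0), (5000.0, 10.0)]
_FREQS = [f for f, _ in NR_POINTS]


def dolby_b_nr_db(freq_hz: float) -> Optional[float]:
    """Interpolated Dolby B noise reduction at freq_hz, or None outside the table.

    ASSUMPTION: linear interpolation in log2(frequency) between quoted points.
    """
    if freq_hz < _FREQS[0] or freq_hz > _FREQS[-1]:
        return None
    i = bisect.bisect_right(_FREQS, freq_hz) - 1
    if i == len(NR_POINTS) - 1:  # exactly at the last tabulated point
        return NR_POINTS[-1][1]
    (f0, nr0), (f1, nr1) = NR_POINTS[i], NR_POINTS[i + 1]
    t = math.log2(freq_hz / f0) / math.log2(f1 / f0)
    return nr0 + t * (nr1 - nr0)


for f in (600.0, 1800.0, 3500.0, 5000.0):
    print(f, round(dolby_b_nr_db(f), 2))
```

With this interpolation, `dolby_b_nr_db(1800.0)` comes out at roughly 7.2 dB, between the 6 dB and 8 dB figures at 1.2 kHz and 2.4 kHz.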
Before the introduction of later consumer variants (Dolby C being the first), cassette hardware supporting Dolby B and cassettes encoded with it were labeled simply "Dolby System", "Dolby NR", or wordlessly with the Dolby symbol. Some record labels and hardware manufacturers continued this practice even after Dolby C had been introduced, while the new standard was still relatively little known. JVC's ANRS[10] system, used in place of Dolby B on earlier JVC cassette decks, is considered compatible with Dolby B. JVC eventually abandoned ANRS in favor of official Dolby B support; some JVC decks have a combined "ANRS / Dolby B" setting on their noise-reduction switch.

Dolby FM

In the early 1970s, some expected Dolby NR to become normal in FM radio broadcasts, and some tuners and amplifiers were manufactured with decoding circuitry. Some tape recorders also had a Dolby B "pass-through" mode. In 1971 WFMT started to transmit programs with Dolby NR,[11] and soon some 17 stations were broadcasting with noise reduction, but by 1974 its use was already declining.[12] Dolby FM was based on Dolby B,[13] but used a modified 25 μs pre-emphasis time constant[14] and a frequency-selective companding arrangement to reduce noise. A similar system named High Com FM was evaluated in Germany by the IRT between July 1979 and December 1981,[15] and field-trialed until 1984. It was based on the Telefunken High Com broadband compander system, but was never introduced commercially in FM broadcasting.[16] Another competing system was FMX, which was based on CX.

RMS

A fully Dolby B-compatible compander was developed and used on many tape recorders in the former German Democratic Republic in the 1980s.
It was called RMS (from Rauschminderungssystem, English: "noise reduction system").[17]

Dolby C

The Dolby C-type noise reduction system was developed in 1980.[18][19][20] It provides about 15 dB of noise reduction (A-weighted) in the 2 kHz to 8 kHz region, where the ear is highly sensitive and most tape hiss is concentrated. Its noise reduction comes from a dual-level, staggered arrangement of series-connected compressors and expanders, with a high-level stage and a low-level stage. Its action extends to lower frequencies than in Dolby B. As in Dolby B, a "sliding band" technique (the operating frequency varies with signal level) helps to suppress undesirable breathing, which is often a problem with other noise reduction techniques. Because of the extra signal processing, Dolby C-type recordings sound distorted when played back on equipment without Dolby C decoding circuitry. Some of this harshness can be mitigated by using Dolby B on playback, which reduces the strength of the high frequencies. With Dolby C-type processing, noise reduction begins two octaves lower in frequency than with Dolby B, in an attempt to maintain a psychoacoustically uniform noise floor. In the region above 8 kHz, where the ear is less sensitive to noise, special spectral-skewing and anti-saturation networks come into play. These circuits prevent cross-modulation of low frequencies with high frequencies and suppress tape saturation when large signal transients are present. They also increase the effective headroom of the cassette tape system. As a result, recordings are cleaner and crisper, with a much improved high-frequency response that the cassette medium had previously lacked. With a good-quality tape, the Dolby C response could be flat to 20 kHz at the 0 dB recording level, a previously unattainable result.
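The dual-level, series-connected arrangement can be illustrated by chaining two static compressor stages and undoing them in reverse order on playback. Everything numeric here is hypothetical. Each stage borrows a Dolby B-like +10 dB boost, and the low-level stage is simply staggered 20 dB below the high-level stage. Which stage comes first in the encoder is also an assumption. The real Dolby C stages are frequency dependent (sliding band), and the text does not give their thresholds. Two properties hold for any such chain: the boosts add at very low levels, and the decoder must apply the inverse stages in the opposite order to recover the signal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """One static compressor stage (levels in dB). HYPOTHETICAL parameters."""
    knee_db: float              # at or below this input level the full boost applies
    top_db: float               # at or above this input level no boost applies
    max_boost_db: float = 10.0  # must satisfy knee_db + max_boost_db < top_db

    def encode(self, x: float) -> float:
        if x <= self.knee_db:
            return x + self.max_boost_db
        if x >= self.top_db:
            return x
        # boost fades linearly from max_boost_db at the knee to 0 at the top
        frac = (self.top_db - x) / (self.top_db - self.knee_db)
        return x + self.max_boost_db * frac

    def decode(self, y: float) -> float:
        if y <= self.knee_db + self.max_boost_db:
            return y - self.max_boost_db
        if y >= self.top_db:
            return y
        # invert the linear transition segment
        slope = 1.0 - self.max_boost_db / (self.top_db - self.knee_db)
        return self.top_db + (y - self.top_db) / slope


HIGH_LEVEL = Stage(knee_db=-40.0, top_db=0.0)
LOW_LEVEL = Stage(knee_db=-60.0, top_db=-20.0)  # staggered 20 dB lower (assumed)
STAGES = (HIGH_LEVEL, LOW_LEVEL)                # encoder order (assumed)


def encode_chain(x: float) -> float:
    for stage in STAGES:
        x = stage.encode(x)
    return x


def decode_chain(y: float) -> float:
    # inverse of a composition: apply the inverses in reverse order
    for stage in reversed(STAGES):
        y = stage.decode(y)
    return y


for level in (-100.0, -45.0, -10.0):
    print(level, encode_chain(level), decode_chain(encode_chain(level)))
```

At −100 dB the two stages together add 20 dB of boost. Running the decoder stages in the same order as the encoder would not, in general, restore the original level.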
An A-weighted signal-to-noise ratio of 72 dB (re 3% THD at 400 Hz), with no unwanted "breathing" effects even on difficult-to-record passages, was possible. Dolby C first appeared on higher-end cassette decks in the 1980s. The first commercially available cassette deck with Dolby C was the NAD 6150C, which came onto the market around 1981. In Japan, the first cassette deck with Dolby C was the AD-FF5 from Aiwa. Dolby C was also used on professional video equipment for the audio tracks of the Betacam and U-matic SP videocassette formats. Cassette decks with Dolby C also included Dolby B for backward compatibility, and were usually labeled as having "Dolby B-C NR".

Dolby SR

The Dolby SR (Spectral Recording) system, introduced in 1986, was the company's second professional noise reduction system. It is a much more aggressive noise reduction approach than Dolby A: it attempts to maximize the recorded signal at all times using a complex series of filters that change according to the input signal. As a result, Dolby SR is much more expensive to implement than Dolby B or C, but it can provide up to 25 dB of noise reduction in the high-frequency range. It is found only on professional recording equipment.[1][21] In the motion picture industry, on distribution prints of films, the Dolby A and SR markings refer to Dolby Surround. This is not just a method of noise reduction: more importantly, it encodes two additional audio channels on the standard optical soundtrack, giving left, center, right, and surround. SR prints are largely backward compatible with older Dolby A equipment. The Dolby SR-D marking indicates that both an analog Dolby SR soundtrack and a digital Dolby Digital soundtrack are present on one print.

Dolby S

Dolby S was introduced in 1989.
It was intended that Dolby S would become standard on commercial pre-recorded music cassettes, much as Dolby B had in the 1970s. However, it came to market just as the compact disc was replacing the Compact Cassette as the dominant mass-market music format. Dolby Labs claimed that most members of the general public could not tell the difference between the sound of a CD and a Dolby S-encoded cassette. Dolby S mostly appeared on high-end audio equipment and was never widely used. Dolby S is much more resistant than Dolby C to playback problems caused by noise from the tape transport mechanism. Dolby S was also claimed to be playback-compatible with Dolby B, in that a Dolby S recording could be played back on older Dolby B equipment with some benefit. It is essentially a cut-down version of Dolby SR and uses many of the same noise reduction techniques. Dolby S is capable of 10 dB of noise reduction at low frequencies and up to 24 dB at high frequencies.[23]

Dolby HX/HX-Pro

Magnetic tape is inherently non-linear because of the hysteresis of the magnetic material. If an analog signal were recorded directly onto magnetic tape, this non-linearity would make its reproduction extremely distorted. To overcome this, a high-frequency signal known as bias is mixed with the recorded signal; the bias "pushes" the envelope of the signal into the linear region. If the audio signal contains strong high-frequency content (in particular from percussion instruments such as hi-hat cymbals), this adds to the constant bias, causing magnetic saturation on the tape. Dynamic, or adaptive, biasing automatically reduces the bias signal in the presence of strong high-frequency signals, making it possible to record at a higher signal level. The original Dolby HX, where HX stands for Headroom eXtension, was invented in 1979 by Kenneth Gundry of Dolby Laboratories. It was rejected by the industry because of its inherent flaws.
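Adaptive biasing, as described above, can be sketched as a control loop. It measures the treble content of each block of audio and lowers the bias accordingly. Several details are illustrative assumptions and not the Dolby HX or Bang & Olufsen HX-Pro circuit. These are the detector (a first-order high-pass at a hypothetical 5 kHz followed by an RMS measurement), the normalized units, the floor value, and the rule "subtract the measured treble level from the nominal bias". The function names are hypothetical.

```python
import math
from typing import List, Sequence


def highpass(samples: Sequence[float], sample_rate: float, cutoff_hz: float) -> List[float]:
    """First-order RC high-pass filter, used here as a crude treble detector."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out: List[float] = []
    prev_x = prev_y = 0.0
    for x in samples:
        y = alpha * (prev_y + x - prev_x)
        out.append(y)
        prev_x, prev_y = x, y
    return out


def rms(samples: Sequence[float]) -> float:
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def adaptive_bias(block: Sequence[float], sample_rate: float,
                  nominal_bias: float = 1.0, min_bias: float = 0.2,
                  hf_cutoff_hz: float = 5000.0) -> float:
    """Bias amplitude (normalized, hypothetical units) for one block of audio.

    ASSUMPTION: strong treble acts like extra bias, so the bias is turned down
    by the measured treble level, but never below min_bias.
    """
    treble = rms(highpass(block, sample_rate, hf_cutoff_hz))
    return max(min_bias, nominal_bias - treble)


if __name__ == "__main__":
    fs = 48000.0

    def tone(freq_hz: float) -> List[float]:
        return [0.5 * math.sin(2 * math.pi * freq_hz * n / fs) for n in range(4800)]

    print(round(adaptive_bias(tone(100.0), fs), 3))    # bass: bias stays near nominal
    print(round(adaptive_bias(tone(10000.0), fs), 3))  # treble: bias is reduced
```

With a 0.5-amplitude tone, a 100 Hz block leaves the bias almost at its nominal value. A 10 kHz block lowers it noticeably, to roughly 0.75 in these units.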
Bang & Olufsen continued work in the same direction, which resulted in a 1981 patent (EP 0046410) by Jørgen Selmer Jensen.[24] Bang & Olufsen immediately licensed HX-Pro to Dolby Laboratories. The license stipulated a priority period of several years for use in consumer products, to protect their own Beocord 9000 cassette tape deck.[25][26] By the mid-1980s the Bang & Olufsen system, marketed through Dolby Laboratories, had become an industry standard under the name Dolby HX Pro. HX-Pro applies only during recording. The improved signal-to-noise ratio is available no matter which tape deck the tape is played back on. HX-Pro is therefore not a noise-reduction system in the same sense as Dolby A, B, C, and S. It does, however, help improve the encode/decode tracking accuracy of noise reduction by reducing tape non-linearity. Some record companies issued HX-Pro pre-recorded cassette tapes during the late 1980s and early 1990s.

Today

Digital audio has proliferated widely in professional and consumer applications (e.g., compact discs, music downloads, and music streaming). This has made analog audio production less prevalent and shifted Dolby's focus to Dolby Vision, but Dolby's analog noise reduction systems are still used in niche analog production environments.[citation needed]

References
