
Age of Earth

The age of Earth is estimated to be 4.54 ± 0.05 billion years (4.54 × 10⁹ years ± 1%).[1][2][3][4] This age may represent the age of Earth's accretion, of core formation, or of the material from which Earth formed.[2] This dating is based on evidence from radiometric age-dating of meteorite[5] material and is consistent with the radiometric ages of the oldest-known terrestrial material[6] and lunar samples.[7]

Following the development of radiometric age-dating in the early 20th century, measurements of lead in uranium-rich minerals showed that some were in excess of a billion years old.[8] The oldest such minerals analyzed to date, small crystals of zircon from the Jack Hills of Western Australia, are at least 4.404 billion years old.[6][9][10] Calcium–aluminium-rich inclusions, the oldest known solid constituents within meteorites that formed within the Solar System, are 4.567 billion years old,[11][12] giving a lower limit for the age of the Solar System.

It is hypothesised that the accretion of Earth began soon after the formation of the calcium–aluminium-rich inclusions and the meteorites. Because the duration of this accretion process is not yet known, and predictions from different accretion models range from a few million up to about 100 million years, the difference between the age of Earth and that of the oldest rocks is difficult to determine. It is also difficult to determine the exact age of the oldest rocks exposed at Earth's surface, as they are aggregates of minerals of possibly different ages.

Development of modern geologic concepts

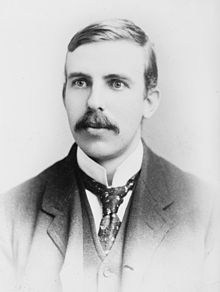

Studies of strata, the layering of rocks and soil, gave naturalists an appreciation that Earth may have been through many changes during its existence. These layers often contained fossilized remains of unknown creatures, leading some to interpret a progression of organisms from layer to layer.[13][14] Nicolas Steno in the 17th century was one of the first naturalists to appreciate the connection between fossil remains and strata.[14] His observations led him to formulate important stratigraphic concepts (the "law of superposition" and the "principle of original horizontality").[15] In the 1790s, William Smith hypothesized that if two layers of rock at widely differing locations contained similar fossils, then it was very plausible that the layers were the same age.[16] Smith's nephew and student, John Phillips, later calculated by such means that Earth was about 96 million years old.[17] In the mid-18th century, the naturalist Mikhail Lomonosov suggested that Earth had been created separately from, and several hundred thousand years before, the rest of the universe.[citation needed] Lomonosov's ideas were mostly speculative.[citation needed] In 1779 the Comte de Buffon tried to obtain a value for the age of Earth using an experiment: he created a small globe that resembled Earth in composition and then measured its rate of cooling. This led him to estimate that Earth was about 75,000 years old.[18] Other naturalists used these hypotheses to construct a history of Earth, though their timelines were inexact as they did not know how long it took to lay down stratigraphic layers.[15] In 1830, geologist Charles Lyell, developing ideas found in James Hutton's works, popularized the concept that the features of Earth were in perpetual change, eroding and reforming continuously, and that the rate of this change was roughly constant. This was a challenge to the traditional view, which saw the history of Earth as dominated by intermittent catastrophes.
Many naturalists were influenced by Lyell to become "uniformitarians" who believed that changes were constant and uniform.[citation needed]

Early calculations

In 1862, the physicist William Thomson, 1st Baron Kelvin, published calculations that fixed the age of Earth at between 20 million and 400 million years.[19][20] He assumed that Earth had formed as a completely molten object, and determined the amount of time it would take for the near-surface temperature gradient to decrease to its present value. His calculations did not account for heat produced via radioactive decay (a then unknown process) or, more significantly, convection inside Earth, which keeps the temperature of the upper mantle high for much longer and so maintains a high thermal gradient in the crust.[19] Even more constraining were Thomson's estimates of the age of the Sun, which were based on estimates of its thermal output and a theory that the Sun obtains its energy from gravitational collapse; Thomson estimated that the Sun is about 20 million years old.[21][22]

Geologists such as Lyell had difficulty accepting such a short age for Earth. For biologists, even 100 million years seemed much too short to be plausible. In Charles Darwin's theory of evolution, the process of random heritable variation with cumulative selection requires great durations of time, and Darwin stated that Thomson's estimates did not appear to provide enough time.[23] According to modern biology, the evolutionary history of life, from its beginning to today, spans the 3.5 to 3.8 billion years that geological dating shows have passed since the last universal ancestor of all living organisms.[24] In a lecture in 1869, Darwin's great advocate, Thomas Henry Huxley, attacked Thomson's calculations, suggesting that they appeared precise in themselves but were based on faulty assumptions.
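Both of Thomson's arguments reduce to short formulas. The Python sketch below is an illustration under stated assumptions rather than a reconstruction of his papers: Earth is treated as a conducting half-space that starts uniformly hot (the textbook error-function solution of the heat equation), and the Sun as a uniform-density sphere radiating away its gravitational binding energy at today's luminosity. Every numerical input (initial temperature, thermal diffusivity, near-surface gradient, solar mass, radius and luminosity) is an assumed value supplied here, not a figure from this article.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def conductive_cooling_age(initial_temp_k, diffusivity_m2_s, surface_gradient_k_per_m):
    """Years for a cooling half-space to reach a given surface temperature gradient.

    Model (an assumption): a semi-infinite solid starts at a uniform excess
    temperature T0 and its surface is held at 0, so
    T(z, t) = T0 * erf(z / (2 * sqrt(kappa * t))).
    The surface gradient is then G = T0 / sqrt(pi * kappa * t), hence
    t = T0**2 / (pi * kappa * G**2). Radiogenic heat and convection are ignored.
    """
    seconds = initial_temp_k ** 2 / (math.pi * diffusivity_m2_s * surface_gradient_k_per_m ** 2)
    return seconds / SECONDS_PER_YEAR


def contraction_lifetime(mass_kg, radius_m, luminosity_w):
    """Years a star could shine on gravitational contraction alone.

    Assumes a uniform-density sphere, whose gravitational binding energy is
    3 G M^2 / (5 R), radiated away at a constant luminosity.
    """
    G = 6.674e-11  # gravitational constant, m^3 kg^-1 s^-2
    energy_j = 3 * G * mass_kg ** 2 / (5 * radius_m)
    return energy_j / luminosity_w / SECONDS_PER_YEAR


# Illustrative inputs (assumptions, not figures from the article):
T0 = 3900.0                  # initial excess temperature of a molten Earth, K
KAPPA = 1.2e-6               # thermal diffusivity of rock, m^2/s
GRADIENT = (5 / 9) / 15.24   # 1 degree F per 50 feet, converted to K/m

earth_age = conductive_cooling_age(T0, KAPPA, GRADIENT)
# Modern solar mass (kg), radius (m) and luminosity (W)
sun_age = contraction_lifetime(1.989e30, 6.957e8, 3.828e26)
print(f"Earth: ~{earth_age / 1e6:.0f} Myr, Sun: ~{sun_age / 1e6:.0f} Myr")
```

With these inputs the sketch gives roughly 100 million years for Earth and roughly 19 million years for the Sun, the same order as the figures quoted above. Because the cooling age scales with (T0/G)², modest changes to the assumed inputs shift the result by large factors, which helps explain how an estimate could span a range as wide as 20 to 400 million years. Neither calculation includes radiogenic heating, convection, or nuclear fusion.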
The physicist Hermann von Helmholtz (in 1856) and astronomer Simon Newcomb (in 1892) contributed their own calculations of 22 and 18 million years, respectively, to the debate: they independently calculated the amount of time it would take for the Sun to condense down to its current diameter and brightness from the nebula of gas and dust from which it was born.[25] Their values were consistent with Thomson's calculations. However, they assumed that the Sun glowed only from the heat of its gravitational contraction; the process of solar nuclear fusion was not yet known to science. In 1892, Thomson was ennobled as Lord Kelvin in appreciation of his many scientific accomplishments. In 1895 John Perry challenged Kelvin's figure by questioning his assumptions about conductivity, and Oliver Heaviside entered the dialogue, considering it "a vehicle to display the ability of his operator method to solve problems of astonishing complexity."[26] Other scientists backed up Kelvin's figures. Darwin's son, the astronomer George H. Darwin, proposed that Earth and Moon had broken apart in their early days when they were both molten. He calculated the amount of time it would have taken for tidal friction to give Earth its current 24-hour day. His value of 56 million years was additional evidence that Thomson was on the right track.[25] The last estimate Kelvin gave, in 1897, was: "that it was more than 20 and less than 40 million year old, and probably much nearer 20 than 40".[27] In 1899 and 1900, John Joly calculated the rate at which the oceans should have accumulated salt from erosion processes and determined that the oceans were about 80 to 100 million years old.[25]

Radiometric dating

Overview

By their chemical nature, rock minerals contain certain elements and not others; but in rocks containing radioactive isotopes, the process of radioactive decay generates exotic elements over time.
The age of the rock can be calculated by measuring the concentration of the stable end product of the decay, together with knowledge of the half-life and initial concentration of the decaying element.[28] Typical end products of radioactive decay are argon from decay of potassium-40, and lead from decay of uranium and thorium.[28] If the rock becomes molten, as happens in Earth's mantle, such nonradioactive end products typically escape or are redistributed.[28] Thus the age of the oldest terrestrial rock gives a minimum for the age of Earth, assuming that no rock has been intact for longer than Earth itself.

Convective mantle and radioactivity

The discovery of radioactivity introduced another factor in the calculation. After Henri Becquerel's initial discovery in 1896,[29][30][31][32] Marie and Pierre Curie discovered the radioactive elements polonium and radium in 1898;[33] and in 1903, Pierre Curie and Albert Laborde announced that radium produces enough heat to melt its own weight in ice in less than an hour.[34] Geologists quickly realized that this upset the assumptions underlying most calculations of the age of Earth. These had assumed that the original heat of Earth and the Sun had dissipated steadily into space, but radioactive decay meant that this heat had been continually replenished. George Darwin and John Joly were the first to point this out, in 1903.[35]

Invention of radiometric dating

Radioactivity, which had overthrown the old calculations, yielded a bonus by providing a basis for new calculations, in the form of radiometric dating. Ernest Rutherford and Frederick Soddy had jointly continued their work on radioactive materials and concluded that radioactivity was caused by a spontaneous transmutation of atomic elements. In radioactive decay, an element transforms into another element, releasing alpha, beta, or gamma radiation in the process.
They also determined that a particular isotope of a radioactive element decays into another element at a distinctive rate. This rate is given in terms of a "half-life", the amount of time it takes for half of a mass of that radioactive material to break down into its "decay product". Some radioactive materials have short half-lives; some have long half-lives. Uranium and thorium have long half-lives and so persist in Earth's crust, but radioactive elements with short half-lives have generally disappeared. This suggested that it might be possible to measure the age of Earth by determining the relative proportions of radioactive materials in geological samples. In reality, radioactive elements do not always decay directly into nonradioactive ("stable") elements; instead, they may decay into other radioactive elements that have their own half-lives, and so on, until they reach a stable element. These "decay chains", such as the uranium–radium and thorium series, were known within a few years of the discovery of radioactivity and provided a basis for constructing techniques of radiometric dating.

The pioneers of radiometric dating were chemist Bertram B. Boltwood and physicist Rutherford. Boltwood had conducted studies of radioactive materials as a consultant, and when Rutherford lectured at Yale in 1904,[36] Boltwood was inspired to describe the relationships between elements in various decay series. Late in 1904, Rutherford took the first step toward radiometric dating by suggesting that the alpha particles released by radioactive decay could be trapped in a rocky material as helium atoms. At the time, Rutherford was only guessing at the relationship between alpha particles and helium atoms, but he would prove the connection four years later. Soddy and Sir William Ramsay had just determined the rate at which radium produces alpha particles, and Rutherford proposed that he could determine the age of a rock sample by measuring its concentration of helium.
He dated a rock in his possession to an age of 40 million years by this technique. Rutherford wrote of addressing a meeting of the Royal Institution in 1904:

Rutherford assumed that the rate of decay of radium as determined by Ramsay and Soddy was accurate and that helium did not escape from the sample over time. Rutherford's scheme was inaccurate, but it was a useful first step. Boltwood focused on the end products of decay series. In 1905, he suggested that lead was the final stable product of the decay of radium. It was already known that radium was an intermediate product of the decay of uranium. Rutherford joined in, outlining a decay process in which radium emitted five alpha particles through various intermediate products to end up with lead, and speculated that the radium–lead decay chain could be used to date rock samples. Boltwood did the legwork and by the end of 1905 had provided dates for 26 separate rock samples, ranging from 92 to 570 million years. He did not publish these results, which was fortunate because they were flawed by measurement errors and poor estimates of the half-life of radium. Boltwood refined his work and finally published the results in 1907.[8] Boltwood's paper pointed out that samples taken from comparable layers of strata had similar lead-to-uranium ratios, and that samples from older layers had a higher proportion of lead, except where there was evidence that lead had leached out of the sample. His studies were flawed by the fact that the decay series of thorium was not understood, which led to incorrect results for samples that contained both uranium and thorium. However, his calculations were far more accurate than any that had been performed to that time. Refinements in the technique would later give ages for Boltwood's 26 samples of 410 million to 2.2 billion years.[8]

Arthur Holmes establishes radiometric dating

Although Boltwood published his paper in a prominent geological journal, the geological community had little interest in radioactivity.[citation needed] Boltwood gave up work on radiometric dating and went on to investigate other decay series.
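Boltwood's lead-to-uranium ratios rest on the closed-system decay equation: if no lead was present when a mineral formed, the radiogenic lead and the surviving uranium satisfy Pb = U(e^(λt) − 1), so t = ln(1 + Pb/U)/λ with λ = ln 2 / t½. The minimal Python sketch below also suggests why his thorium-bearing samples went wrong: thorium decays to lead as well, so thorium-derived lead credited to uranium inflates the ratio and therefore the age. The sample is hypothetical, the half-lives are modern values assumed here, the small uranium-235 contribution is ignored, and the isotope labels (206Pb from uranium-238, 208Pb from thorium-232) postdate Boltwood, whose work preceded the discovery of isotopes.

```python
import math

# Decay constants from modern half-lives, per year (assumed values)
LAMBDA_U238 = math.log(2) / 4.468e9
LAMBDA_TH232 = math.log(2) / 1.405e10


def radiogenic_lead(parent_now, decay_const, age_years):
    """Daughter atoms grown in a closed system from parent_now surviving parent atoms.

    From N(t) = N0 * exp(-lambda t): daughter = N0 - N = parent_now * (exp(lambda t) - 1).
    """
    return parent_now * math.expm1(decay_const * age_years)


def lead_uranium_age(lead, uranium):
    """Age in years from a lead-to-uranium ratio, assuming no initial lead
    and that all of the lead came from uranium-238."""
    return math.log1p(lead / uranium) / LAMBDA_U238


# Hypothetical mineral: 500 million years old, four thorium atoms per uranium atom
true_age = 5.0e8
uranium, thorium = 1.0, 4.0
pb206 = radiogenic_lead(uranium, LAMBDA_U238, true_age)   # lead grown from uranium
pb208 = radiogenic_lead(thorium, LAMBDA_TH232, true_age)  # lead grown from thorium

print(f"uranium-derived lead only: {lead_uranium_age(pb206, uranium) / 1e6:.0f} Myr")
print(f"all lead counted:          {lead_uranium_age(pb206 + pb208, uranium) / 1e6:.0f} Myr")
```

With these numbers, counting the thorium-derived lead roughly doubles the apparent age; the same kind of bias arises from any lead already present when the mineral formed.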
Rutherford remained mildly curious about the issue of the age of Earth but did little work on it. Robert Strutt tinkered with Rutherford's helium method until 1910 and then ceased. However, Strutt's student Arthur Holmes became interested in radiometric dating and continued to work on it after everyone else had given up. Holmes focused on lead dating because he regarded the helium method as unpromising. He performed measurements on rock samples and concluded in 1911 that the oldest (a sample from Ceylon) was about 1.6 billion years old.[38] These calculations were not particularly trustworthy. For example, he assumed that the samples had contained only uranium and no lead when they were formed. More important research was published in 1913. It showed that elements generally exist in multiple variants with different masses, or "isotopes". In the 1930s, isotopes would be shown to have nuclei with differing numbers of the neutral particles known as "neutrons". In that same year, other research was published establishing the rules for radioactive decay, allowing more precise identification of decay series. Many geologists felt these new discoveries made radiometric dating so complicated as to be worthless.[citation needed] Holmes felt that they gave him tools to improve his techniques, and he plodded ahead with his research, publishing before and after the First World War. His work was generally ignored until the 1920s, though in 1917 Joseph Barrell, a professor of geology at Yale, redrew geological history as it was understood at the time to conform to Holmes's findings in radiometric dating. 
Barrell's research determined that the layers of strata had not all been laid down at the same rate, and so current rates of geological change could not be used to provide accurate timelines of the history of Earth.[citation needed] Holmes's persistence finally began to pay off in 1921, when the speakers at the yearly meeting of the British Association for the Advancement of Science came to a rough consensus that Earth was a few billion years old and that radiometric dating was credible. Holmes published The Age of the Earth, an Introduction to Geological Ideas in 1927, in which he presented a range of 1.6 to 3.0 billion years. No great push to embrace radiometric dating followed, however, and the die-hards in the geological community stubbornly resisted. They had never cared for attempts by physicists to intrude into their domain, and had successfully ignored them so far.[39] The growing weight of evidence finally tilted the balance in 1931, when the National Research Council of the US National Academy of Sciences decided to resolve the question of the age of Earth by appointing a committee to investigate. As one of the few people trained in radiometric dating techniques, Holmes was a committee member and in fact wrote most of the final report.[40] The report concluded that radioactive dating was the only reliable means of pinning down a geologic time scale. Questions of bias were deflected by the great and exacting detail of the report, which described the methods used, the care with which measurements were made, and their error bars and limitations.[citation needed]

Modern radiometric dating

Radiometric dating continues to be the predominant way scientists date geologic time scales. Techniques for radiometric dating have been tested and fine-tuned on an ongoing basis since the 1960s. About forty different dating techniques have been used so far, working on a wide variety of materials.
Dates for the same sample obtained with these different techniques are in very close agreement on the age of the material.[citation needed] Possible contamination problems do exist, but they have been studied and dealt with by careful investigation, and sample preparation procedures have been minimized to limit the chance of contamination.[citation needed]

Use of meteorites

An age of 4.55 ± 0.07 billion years, very close to today's accepted age, was determined by Clair Cameron Patterson using uranium–lead isotope dating (specifically lead–lead dating) on several meteorites including the Canyon Diablo meteorite and published in 1956.[41] The quoted age of Earth is derived in part from the Canyon Diablo meteorite, for several important reasons, and rests on an understanding of cosmochemistry built up over decades of research.

Most geological samples from Earth cannot give a direct date of the formation of Earth from the solar nebula, because Earth has undergone differentiation into the core, mantle, and crust, and these reservoirs have since had a long history of mixing and unmixing by plate tectonics, weathering, and hydrothermal circulation. All of these processes may adversely affect isotopic dating because the sample cannot always be assumed to have remained a closed system: the parent nuclide, the daughter nuclide (a nuclide is a species of atom characterised by the number of neutrons and protons it contains), or an intermediate daughter nuclide may have been partially removed from the sample, which would skew the resulting isotopic date. To mitigate this effect, it is usual to date several minerals in the same sample to construct an isochron. Alternatively, more than one dating system may be used on a sample to check the date.
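Patterson's lead–lead method and the isochron idea fit together naturally. Uranium-238 and uranium-235 decay to 206Pb and 207Pb at different rates, so samples that formed together from the same initial lead but with different uranium contents fall on a straight line of 207Pb/204Pb against 206Pb/204Pb, and the slope of that line depends only on age: both the uranium content and the initial lead drop out. The Python sketch below is a minimal illustration under stated assumptions: the decay constants and the 238U/235U ratio are conventional values supplied here rather than taken from the article, the initial lead ratios and sample compositions are synthetic, and the ordinary least-squares fit stands in for the error-weighted regressions typically used in practice.

```python
import math

LAMBDA_238 = 1.55125e-10  # uranium-238 decay constant, per year (assumed conventional value)
LAMBDA_235 = 9.8485e-10   # uranium-235 decay constant, per year (assumed conventional value)
U238_PER_U235 = 137.88    # present-day 238U/235U atom ratio (assumed conventional value)


def radiogenic_207_206(age_years):
    """207Pb*/206Pb* ratio produced by uranium decay in a closed system over age_years."""
    return math.expm1(LAMBDA_235 * age_years) / (U238_PER_U235 * math.expm1(LAMBDA_238 * age_years))


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def lead_lead_isochron_age(pb206_204, pb207_204, lo=1e6, hi=6e9):
    """Age from a lead-lead isochron of samples that formed together.

    The isochron slope equals 207Pb*/206Pb*, which rises monotonically with
    age, so the age is recovered by bisection between lo and hi years.
    """
    target = ols_slope(pb206_204, pb207_204)
    for _ in range(200):
        mid = (lo + hi) / 2
        if radiogenic_207_206(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


# Synthetic samples: assumed initial lead plus radiogenic lead grown over
# 4.55 billion years from different uranium contents (mu = 238U/204Pb).
AGE = 4.55e9
INITIAL_206, INITIAL_207 = 9.3, 10.3
mus = [0.0, 5.0, 20.0, 60.0]
pb206 = [INITIAL_206 + mu * math.expm1(LAMBDA_238 * AGE) for mu in mus]
pb207 = [INITIAL_207 + mu / U238_PER_U235 * math.expm1(LAMBDA_235 * AGE) for mu in mus]

print(f"isochron age: {lead_lead_isochron_age(pb206, pb207) / 1e9:.2f} billion years")
```

The first synthetic sample contains no uranium, so it simply records the initial lead; samples richer in uranium plot progressively farther along the same line.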
Some meteorites are furthermore considered to represent the primitive material from which the accreting solar disk was formed.[42] Some have behaved as closed systems (for some isotopic systems) soon after the solar disk and the planets formed.[citation needed] To date, these assumptions are supported by much scientific observation and repeated isotopic dates, and they form a more robust hypothesis than one assuming that a terrestrial rock has retained its original composition. Nevertheless, ancient Archaean lead ores of galena have been used to date the formation of Earth, as these represent the earliest formed lead-only minerals on the planet and record the earliest homogeneous lead–lead isotope systems on the planet. These have returned age dates of 4.54 billion years with a margin of error as small as 1%.[43] Statistics for several meteorites that have undergone isochron dating are as follows:[44]

Canyon Diablo meteorite

The Canyon Diablo meteorite was used because it is both large and representative of a particularly rare type of meteorite that contains sulfide minerals (particularly troilite, FeS), metallic nickel-iron alloys, and silicate minerals. This is important because the presence of the three mineral phases allows investigation of isotopic dates using samples that provide a great separation in concentrations between parent and daughter nuclides. This is particularly true of uranium and lead. Lead is strongly chalcophilic and is found in the sulfide at a much greater concentration than in the silicate, whereas uranium is not. This segregation of parent and daughter nuclides during the formation of the meteorite allowed the formation of the solar disk, and hence of the planets, to be dated far more precisely than ever before.

The age determined from the Canyon Diablo meteorite has been confirmed by hundreds of other age determinations, from both terrestrial samples and other meteorites.[45] The meteorite samples, however, show a spread from 4.53 to 4.58 billion years ago. This is interpreted as the duration of formation of the solar nebula and its collapse into the solar disk to form the Sun and the planets. This 50-million-year span allows for accretion of the planets from the original solar dust and meteorites.

The Moon, as another extraterrestrial body that has not undergone plate tectonics and that has no atmosphere, provides quite precise age dates from the samples returned from the Apollo missions. Rocks returned from the Moon have been dated at a maximum of 4.51 billion years old. Martian meteorites that have landed on Earth have also been dated to around 4.5 billion years old by lead–lead dating. Lunar samples, since they have not been disturbed by weathering, plate tectonics or material moved by organisms, can also provide dating by direct electron microscope examination of cosmic ray tracks.
The accumulation of dislocations generated by high-energy cosmic ray particle impacts provides another confirmation of the isotopic dates. Cosmic ray dating is only useful on material that has not been melted, since melting erases the crystalline structure of the material and wipes away the tracks left by the particles. Altogether, the concordance of age dates of both the earliest terrestrial lead reservoirs and all other reservoirs within the Solar System found to date supports the conclusion that Earth and the rest of the Solar System formed around 4.53 to 4.58 billion years ago.[citation needed]

